17 February 2023

Highly automated driving is expected to reduce the risk of accidents caused by human error. However, research suggests that this type of driving can also increase driver distraction, which would make it harder for the driver to regain manual control of the vehicle when needed. This can happen, for instance, when a variable message sign (VMS) warns of a sudden, nearby danger and the distracted driver does not notice the message.

Nowadays, drivers can receive auditory messages with up-to-date information relevant to their trip; for example, the information displayed as text and/or pictograms on a VMS can be delivered inside the vehicle. Some studies suggest that when the text shown on a VMS is also received in audio form (i.e., audiovisual rather than visual-only), the driver is better at distinguishing between non-critical messages, which don’t require an immediate response, and critical messages, which do.

However, these previous studies did not investigate whether this result also holds when the driver is distracted by an activity involving both vision and hearing, which can happen, for example, during automated driving (Fig. 1). Therefore, the first objective of this work was to compare how effectively VMS messages are identified in the audiovisual and visual-only modalities. The second objective was to explore the potential use of the audiovisual modality to help drivers of highly automated vehicles identify critical situations.

Figure 1. Watching a television series during autonomous driving.

How was the study conducted?

Twenty-four volunteers participated in a driving simulator study with the autonomous mode active (Fig. 2). During the route, an episode of a television series was projected on a table integrated into the simulator.

Figure 2. Frontal view of the simulated route (the green symbol indicating the activation of autonomous mode appears bottom-right).

Along the route, 40 VMS were presented, in both audiovisual and visual-only form. Participants had to complete two tasks: (a) pay attention to the TV series; and (b) regain manual control of the car if the VMS displayed a ‘critical message.’ At the end of the experiment, they were asked about the TV series to determine how much attention they had paid to it. To assess whether the objectives of the work were met, both behavioral (accuracy and speed of participants’ responses) and psychophysiological (eye movements) variables were analyzed.

What has been found?

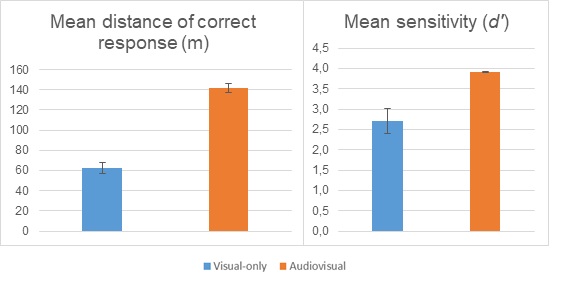

Overall, the results showed that, when the audio was available, the participants: (a) were better able to discriminate between VMS messages, and (b) responded earlier (Fig. 3). In addition, their gaze pattern on the road was more efficient: they looked at the road half as often, took longer to do so, and, when they did, spent less time on it (Fig. 4).
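The ability to discriminate critical from non-critical messages is commonly summarized with a sensitivity index such as d′ from signal detection theory. This summary does not state which index the authors used, so the following is only a minimal sketch under that assumption. The function name `d_prime`, the log-linear correction, and all counts are hypothetical and are not data from the study.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate).

    A "hit" is taking over control after a critical message; a
    "false alarm" is taking over after a non-critical one.
    """
    # Log-linear correction (an assumption of this sketch): keeps both
    # rates strictly between 0 and 1 so the z-transform stays finite.
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)

    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts for one participant (NOT data from the study):
# 10 critical and 10 non-critical messages per modality.
audiovisual = d_prime(hits=9, misses=1, false_alarms=1, correct_rejections=9)
visual_only = d_prime(hits=7, misses=3, false_alarms=3, correct_rejections=7)

print(f"audiovisual d' = {audiovisual:.2f}")
print(f"visual-only d' = {visual_only:.2f}")
```

A higher d′ means critical and non-critical messages are told apart more reliably, regardless of how willing the driver is to take over in general.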

Figure 3. Mean distance of correct responses and sensitivity by type of message (audiovisual and visual-only).

Figure 4. Road glances by type of message (audiovisual and visual-only).

What can be concluded?

In conclusion, traffic messages are processed better when they are presented in both auditory and visual form. These results are of particular interest to engineers designing highly automated cars, since the design of automated systems must ensure that the driver remains attentive enough to take over control.

Associated publication: Pi-Ruano, M., Roca, J. & Tejero, P. (2024). Audiovisual presentation of variable message signs improves message processing in distracted drivers during partially automated driving. Journal of Safety Research. DOI: https://doi.org/10.1016/j.jsr.2024.01.014

You can download the paper about the study here: https://authors.elsevier.com/sd/article/S0022-4375(24)00014-8

Funding: This publication is part of the R+D+i project PID2019-106562GB-I00, funded by MCIN/AEI/10.13039/501100011033, and the AICO/2021/290 project, funded by the Conselleria de Educación, Universidades y Empleo (Generalitat Valenciana). In addition, Marina Pi-Ruano is the beneficiary of a predoctoral contract (ACIF/2019/160), funded by the Conselleria d’Innovació, Universitats, Ciència i Societat Digital and the European Social Fund.
